This solution demonstrates how to build a serverless ETL pipeline with Amazon EventBridge, Kinesis Firehose, and AWS Glue.

-

Amazon EventBridge is a high-throughput serverless event router, used here for ingesting data. It provides out-of-the-box integration with multiple AWS services. We can generate custom events from multiple heterogeneous sources and route them to supported AWS services without writing any integration logic. With content-based routing, a single event bus can route events to different services depending on whether the event content matches a rule's pattern.
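To make content-based routing more concrete, the sketch below shows how an exact-match event pattern selects events. The `matches` function is a simplified stand-in that handles only exact-value matching on nested fields; it is not EventBridge's implementation, and the `source` value and the `detail` fields are hypothetical.

```python
from typing import Any


def matches(pattern: dict[str, Any], event: dict[str, Any]) -> bool:
    """Return True if `event` satisfies `pattern` (exact-value matching only)."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern: recurse into the corresponding nested object.
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        else:
            # A list of allowed values: the event value must equal one of them.
            if actual not in expected:
                return False
    return True


# Hypothetical pattern: select only card transactions from India.
pattern = {
    "source": ["transactions"],
    "detail": {"country": ["IN"], "type": ["card"]},
}

event_in = {"source": "transactions", "detail": {"country": "IN", "type": "card", "amount": 42}}
event_us = {"source": "transactions", "detail": {"country": "US", "type": "card", "amount": 7}}

print(matches(pattern, event_in))
print(matches(pattern, event_us))
```

A rule with this pattern would forward `event_in` to its target and ignore `event_us`; a second rule with a different pattern on the same bus could send the remaining events elsewhere.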

-

Kinesis Firehose is used to buffer the events and store them as JSON files in S3.

-

AWS Glue is a serverless data integration service. We use Glue to transform the raw event data and to generate a schema with an AWS Glue crawler. An AWS Glue job runs some basic transformations on the incoming raw data, then compresses and partitions it and stores it in Parquet (columnar) format. The Glue job is configured to run every hour. When the job completes successfully, it triggers the Glue crawler, which either creates a schema for the S3 data or updates the existing schema and other metadata, such as newly added or deleted table partitions.
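As an illustration of what partitioning means for the stored data, here is a minimal sketch that derives a Hive-style partition prefix (folders named `key=value`) from an event timestamp. The partition keys (year, month, day) and the `processed-data` base prefix are assumptions chosen for the example; the keys actually used by the Glue job are not stated here.

```python
from datetime import datetime, timezone


def partition_prefix(base: str, event_time: str) -> str:
    """Build a Hive-style partition prefix from a timezone-aware ISO-8601 timestamp."""
    # Normalize to UTC so that every event lands in a deterministic partition.
    ts = datetime.fromisoformat(event_time).astimezone(timezone.utc)
    return f"{base}/year={ts.year}/month={ts.month:02d}/day={ts.day:02d}"


# Hypothetical base prefix and timestamp.
print(partition_prefix("processed-data", "2023-01-05T10:15:00+00:00"))
# processed-data/year=2023/month=01/day=05
```

A crawler that finds folders laid out this way can register `year`, `month`, and `day` as partition columns, which lets Athena skip folders that a query's filter rules out.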

-

Glue Workflow

- Glue Crawler

- Datalake Table

- Once the table is created, we can query it through Athena and visualize it using QuickSight.

Athena Query

- This solution also provides a test Lambda function that generates 500 random events and pushes them to the bus. The function is part of the test_stack.py nested stack and should be invoked on demand. Test Lambda name: serverless-event-simulator-dev
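The following sketch shows one way such an event simulator could build its payload. It is not the code from test_stack.py: the event fields, the `Source` and `DetailType` values, and the bus name `my-event-bus` are all hypothetical. The batching reflects the EventBridge `PutEvents` limit of 10 entries per request.

```python
import json
import random
from datetime import datetime, timezone

COUNTRIES = ["IN", "US", "DE", "BR"]  # hypothetical sample values


def make_entry(bus_name: str) -> dict:
    """Build one PutEvents entry carrying a random transaction."""
    detail = {
        "transaction_id": random.randint(1, 10**9),
        "country": random.choice(COUNTRIES),
        "amount": round(random.uniform(1, 500), 2),
    }
    return {
        "Time": datetime.now(timezone.utc),
        "Source": "transactions",      # hypothetical source name
        "DetailType": "transaction",   # hypothetical detail type
        "Detail": json.dumps(detail),  # PutEvents expects Detail as a JSON string
        "EventBusName": bus_name,
    }


def batches(entries: list, size: int = 10):
    """Yield slices of at most `size` entries (PutEvents accepts up to 10 per call)."""
    for start in range(0, len(entries), size):
        yield entries[start:start + size]


entries = [make_entry("my-event-bus") for _ in range(500)]
chunks = list(batches(entries))
print(len(chunks))  # 50

# With boto3 available and credentials configured, each chunk could be sent with:
#   import boto3
#   client = boto3.client("events")
#   for chunk in chunks:
#       client.put_events(Entries=chunk)
```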

This solution could be used to build a data lake for API usage tracking, state change notifications, content-based routing, and much more.

-

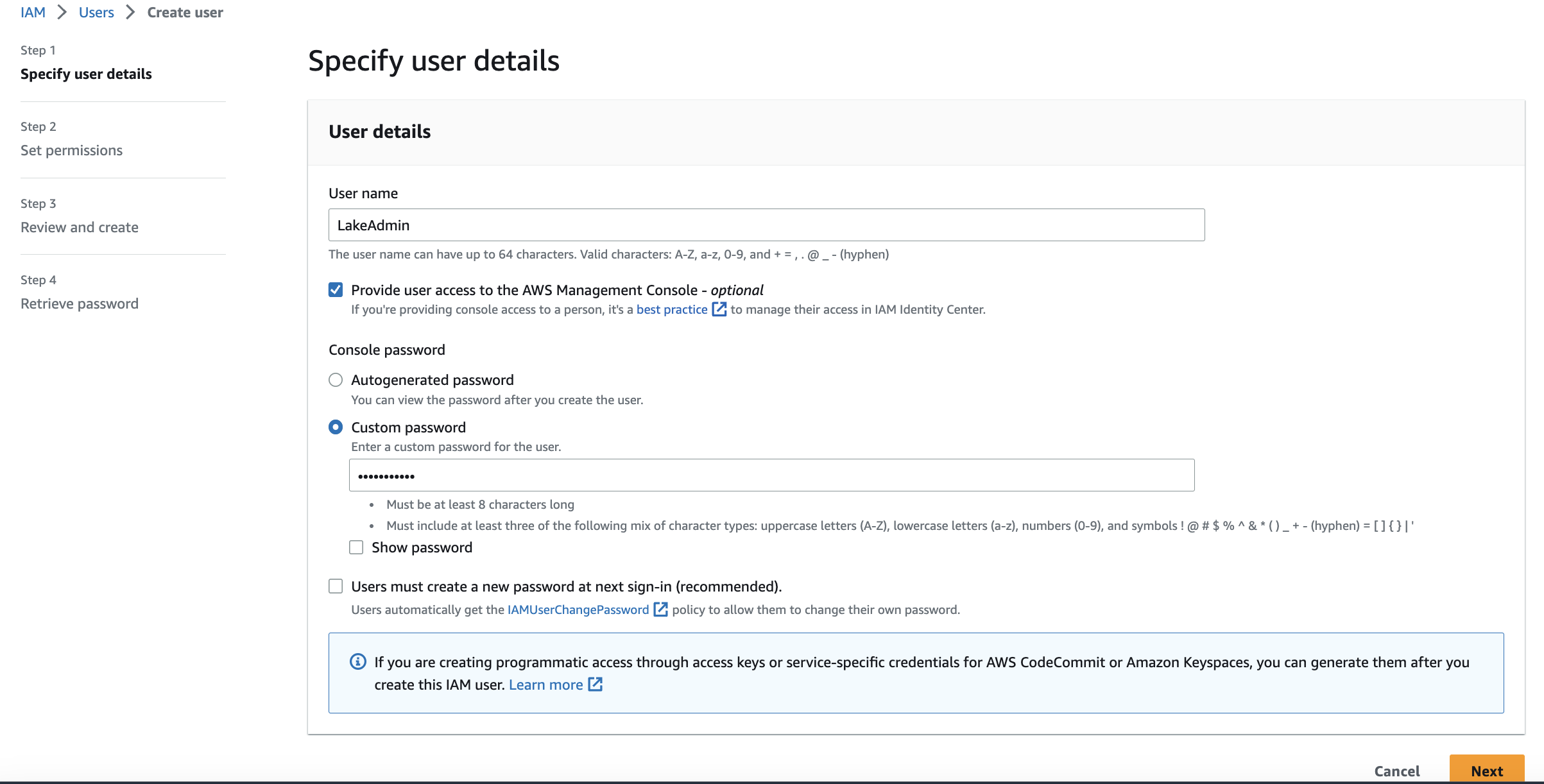

Create a new IAM user (username = LakeAdmin) with Administrator access. Enable console access too.

-

Once the user is created, head to the Security credentials tab and generate an access key and secret key for the new IAM user.

-

Copy the access/secret keys for the newly created IAM user to your local machine.

-

Search for AWS CloudShell in the console. Configure your AWS CLI environment with the access/secret keys of the new admin user by running the command below in AWS CloudShell:

aws configure

-

Clone the serverless-datalake repository from aws-samples:

git clone https://github.com/aws-samples/serverless-datalake.git

-

Change into the cloned directory:

cd serverless-datalake

- Fire the bash script that automates the lake creation process.

sh create_lake.sh

-

After you've successfully deployed this stack to your account, you can test it by invoking the test Lambda function that's deployed as part of this stack. Test Lambda name: serverless-event-simulator-dev. This function pushes 1K random transaction events to the event bus.

-

Verify that raw data is available in the S3 bucket under the prefix 'raw-data/....'
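This check can also be scripted. The sketch below counts the objects under the raw-data prefix using the S3 `list_objects_v2` call and follows continuation tokens when the listing is truncated. The function name is hypothetical, and the client is passed in as a parameter so the function can be exercised with a stub.

```python
def count_raw_objects(s3_client, bucket: str, prefix: str = "raw-data/") -> int:
    """Count the objects stored under `prefix`, following pagination."""
    total = 0
    kwargs = {"Bucket": bucket, "Prefix": prefix}
    while True:
        page = s3_client.list_objects_v2(**kwargs)
        total += page.get("KeyCount", 0)
        if not page.get("IsTruncated"):
            return total
        # More results remain: request the next page.
        kwargs["ContinuationToken"] = page["NextContinuationToken"]


# With boto3 available and credentials configured:
#   import boto3
#   print(count_raw_objects(boto3.client("s3"), "<your-bucket-name>"))
```

A count of zero shortly after invoking the test Lambda does not necessarily mean a failure, because Firehose buffers events before writing them to S3.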

-

Verify if the Glue job is running

-

Once the Glue job succeeds, it triggers a Glue crawler that creates a table in our data lake.

-

Head to Athena after the table is created and query the table

-

Create 3 roles in IAM with Administrator access: cloud-developer, cloud-analyst, and cloud-data-engineer.

-

Add inline permissions to the IAM user (LakeAdmin) so that we can switch roles:

    {
        "Version": "2012-10-17",
        "Statement": {
            "Effect": "Allow",
            "Action": "sts:AssumeRole",
            "Resource": "arn:aws:iam::account-id:role/cloud-*"
        }
    }
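The `account-id` placeholder in the policy has to be replaced with your own 12-digit account ID. As a small convenience, the sketch below renders the same policy for a given account; the function name and the example account ID are hypothetical.

```python
import json


def assume_role_policy(account_id: str, role_prefix: str = "cloud-") -> str:
    """Render the inline policy that lets a user assume any role starting with `role_prefix`."""
    policy = {
        "Version": "2012-10-17",
        "Statement": {
            "Effect": "Allow",
            "Action": "sts:AssumeRole",
            "Resource": f"arn:aws:iam::{account_id}:role/{role_prefix}*",
        },
    }
    return json.dumps(policy, indent=2)


# Example with a placeholder account ID.
print(assume_role_policy("123456789012"))
```

The wildcard in the resource ARN is what allows a single statement to cover all three roles (cloud-developer, cloud-analyst, cloud-data-engineer).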

- Head to AWS Lake Formation and under Tables, select our table -> Actions -> Grant privileges to the cloud-developer role.
  a. Add column-level security.

- View Permissions for the table and revoke IAMAllowedPrincipals. https://docs.aws.amazon.com/lake-formation/latest/dg/upgrade-glue-lake-formation-background.html

-

In an incognito window, log in as the LakeAdmin user.

-

Switch roles, head to Athena, and test column-level security.

-

Now in AWS Lake Formation (back in our main window), create a data filter and add the following:

a. Under Row filter expression, add country='IN' (see https://docs.aws.amazon.com/lake-formation/latest/dg/data-filters-about.html).

b. Include the columns you wish to view for that role.

-

Head back to the incognito window and fire the select command. Confirm that RLS (row-level security) and CLS (column-level security) are working correctly for that role.
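To know what to expect from that query, the sketch below simulates the combined effect of a row filter and a column include list on a few sample rows. It only illustrates the outcome; it does not call Lake Formation, and the column names and sample data are hypothetical.

```python
from typing import Callable


def apply_data_filter(
    rows: list[dict],
    row_predicate: Callable[[dict], bool],
    include_columns: list[str],
) -> list[dict]:
    """Keep the rows that pass the predicate, then project only the included columns."""
    return [
        {column: row[column] for column in include_columns}
        for row in rows
        if row_predicate(row)
    ]


# Hypothetical sample of the data lake table.
rows = [
    {"transaction_id": 1, "country": "IN", "amount": 120.0, "card_number": "xxxx-1111"},
    {"transaction_id": 2, "country": "US", "amount": 75.5, "card_number": "xxxx-2222"},
    {"transaction_id": 3, "country": "IN", "amount": 310.0, "card_number": "xxxx-3333"},
]

# Row filter country='IN', with card_number left out of the included columns.
visible = apply_data_filter(
    rows,
    row_predicate=lambda row: row["country"] == "IN",
    include_columns=["transaction_id", "country", "amount"],
)

for row in visible:
    print(row)
```

If the role's Athena result contains rows for other countries, or shows a column that was left out of the filter, the grant is not taking effect as intended.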

-

Configurations for the dev environment are defined in cdk.json. The S3 bucket name is generated on the fly from the account_id and region in which the CDK app is deployed.
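As a rough sketch of how a bucket name can be derived from the account and region, the function below joins a prefix, the account ID, and the region, then checks the result against the basic S3 naming rules (3 to 63 characters, lowercase letters, digits, dots, and hyphens, starting and ending with a letter or digit). The naming format and the `serverless-datalake` prefix are assumptions; the scheme this stack actually uses may differ.

```python
import re

# Basic S3 bucket naming rule: 3-63 chars, lowercase alphanumerics, dots, hyphens.
_BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def bucket_name(prefix: str, account_id: str, region: str) -> str:
    """Derive a bucket name that is unique per account and region."""
    name = f"{prefix}-{account_id}-{region}".lower()
    if not _BUCKET_NAME.fullmatch(name):
        raise ValueError(f"invalid S3 bucket name: {name!r}")
    return name


# Example with placeholder values.
print(bucket_name("serverless-datalake", "123456789012", "us-east-1"))
# serverless-datalake-123456789012-us-east-1
```

Including the account ID and region makes a name collision unlikely, since S3 bucket names share a global namespace.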